Big O in probability notation

The order in probability notation is used in probability theory and statistical theory in direct parallel to the big-O notation that is standard in mathematics. Where big-O notation deals with the convergence of sequences or sets of ordinary numbers, the order in probability notation deals with the convergence of sets of random variables, where convergence is in the sense of convergence in probability.[1]

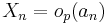

For a set of random variables Xn and a corresponding set of constants an (both indexed by n which need not be discrete), the notation

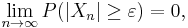

means that the set of values Xn/an converges to zero in probability as n approaches an appropriate limit. Equivalently, Xn = op(an) can be written as an op(1) where Xn = op(1) is defined as,

for every positive ε[2].
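The definition of convergence to zero in probability can be illustrated numerically. The sketch below is only an illustration under stated assumptions: it takes the random variable to be the mean of n independent Uniform(-1, 1) draws (a choice made here, not taken from the text), and the function name `tail_probability` is hypothetical. It estimates by simulation the probability that the absolute value of that mean is at least ε, which should shrink toward zero as n grows.

```python
import random


def tail_probability(n, eps, trials=2000, seed=0):
    """Monte Carlo estimate of P(|X_n| >= eps).

    Assumption for illustration: X_n is the mean of n independent
    Uniform(-1, 1) draws, which has expectation zero.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    hits = 0
    for _ in range(trials):
        mean = sum(rng.uniform(-1.0, 1.0) for _ in range(n)) / n
        if abs(mean) >= eps:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # The estimated tail probability should decrease as n increases.
    for n in (10, 100, 1000):
        print(n, tail_probability(n, eps=0.1))
```

A decreasing sequence of estimates is consistent with the sample mean being of order o_p(1) in this setting, although a simulation can only suggest the limit, not prove it.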

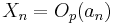

The notation

means that the set of values Xn/an is bounded in the limit in probability.
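The phrase "bounded in the limit in probability" is usually made precise as stochastic boundedness. The statement below is a sketch of that standard formalization, assuming the index tends to infinity; the symbols M and N are introduced here for illustration and do not appear in the text above.

```latex
X_n = O_p(a_n)
\quad\Longleftrightarrow\quad
\forall\, \varepsilon > 0 \;\; \exists\, M < \infty,\; N < \infty :
\quad
P\!\left( \left| \frac{X_n}{a_n} \right| > M \right) < \varepsilon
\quad \text{for all } n > N .
```

Under this reading, any sequence that is of small order in probability relative to the constants is also of big order in probability relative to them, since a ratio that converges to zero in probability is in particular stochastically bounded.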